Setup Spark default configurations

With the environment variables loaded (`source ~/.bashrc`), run the following command to create a Spark default config file: `cp $SPARK_HOME/conf/ $SPARK_HOME/conf/nf`

Edit the file to add some configurations using the following command: `vi $SPARK_HOME/conf/nf`

Make sure you add the following line: localhost

There are many other configurations you can set. Of the two event log settings, the first is the directory where event logs are written while a Spark application runs, and the second is the directory the history server reads event logs from. These two configurations can be the same or different.

Now let's do some verification to ensure it is working. Run the following command to start the Spark shell: `spark-shell`

The interface looks like the screenshot in this article. By default, the Spark master is set to local in the shell.

Run the Spark Pi example via the following command: `run-example SparkPi 10`

This website also provides many other Spark examples.

When a Spark session is running, you can view its details through the web UI portal. The interactive session window prints the address in a line beginning with "Spark context Web UI available at"; the URL is based on the Spark default configurations.
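The event log settings described above can be sketched as follows. The property names `spark.driver.host`, `spark.eventLog.enabled`, `spark.eventLog.dir` and `spark.history.fs.logDirectory` are standard Spark properties, but the values and the scratch file used here are assumptions for illustration, not the article's original settings.

```shell
# Write a sample config to a scratch file rather than touching $SPARK_HOME/conf.
conf_file=$(mktemp)
cat > "$conf_file" <<'EOF'
# Assumed values for illustration; adjust the paths to your environment.
spark.driver.host                localhost
spark.eventLog.enabled           true
# Directory where running applications write their event logs.
spark.eventLog.dir               file:///tmp/spark-events
# Directory the history server reads event logs from.
spark.history.fs.logDirectory    file:///tmp/spark-events
EOF

# List the event-log related settings that were written.
grep -E '^spark\.(eventLog|history)\.' "$conf_file"
```

Pointing both directories at the same location is the simplest arrangement, since the history server then sees every application that writes logs. The event log directory generally has to exist before an application starts.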

Unpack the package using the following commands: `mkdir ~/hadoop/spark-3.0.0` followed by `tar -xvzf spark-3.0.0-bin-without-hadoop.tgz -C ~/hadoop/spark-3.0.0 --strip-components=1`

The Spark binaries are unzipped to the folder ~/hadoop/spark-3.0.0.

Set up the SPARK_HOME environment variable and add its bin subfolder to the PATH variable. We also need to configure the Spark environment variable SPARK_DIST_CLASSPATH to use the Hadoop Java class path.

Run the following command to edit the .bashrc file: `vi ~/.bashrc`

Add the following lines to the end of the file: `export SPARK_HOME=~/hadoop/spark-3.0.0`, `export PATH=$SPARK_HOME/bin:$PATH` and, to configure Spark to use the Hadoop classpath, `export SPARK_DIST_CLASSPATH=$(hadoop classpath)`

Source the modified file to make it effective: `source ~/.bashrc`
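To see what stripping the leading path component does during extraction, here is a minimal sketch that uses a throwaway archive in a temporary directory. The `spark-demo` folder and file names are hypothetical stand-ins for the real Spark tarball layout.

```shell
# Build a throwaway archive that mimics a tarball with one top-level folder.
work_dir=$(mktemp -d)
mkdir -p "$work_dir/src/spark-demo/bin" "$work_dir/src/spark-demo/conf"
touch "$work_dir/src/spark-demo/bin/spark-shell"
tar -czf "$work_dir/spark-demo.tgz" -C "$work_dir/src" spark-demo

# Extract into the target folder, dropping the leading "spark-demo/" component.
target_dir="$work_dir/target"
mkdir -p "$target_dir"
tar -xzf "$work_dir/spark-demo.tgz" -C "$target_dir" --strip-components=1

# bin/ and conf/ now sit directly under the target folder.
ls "$target_dir"
```

Without the strip option, the files would land one level deeper, and SPARK_HOME would have to point at the nested folder instead.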


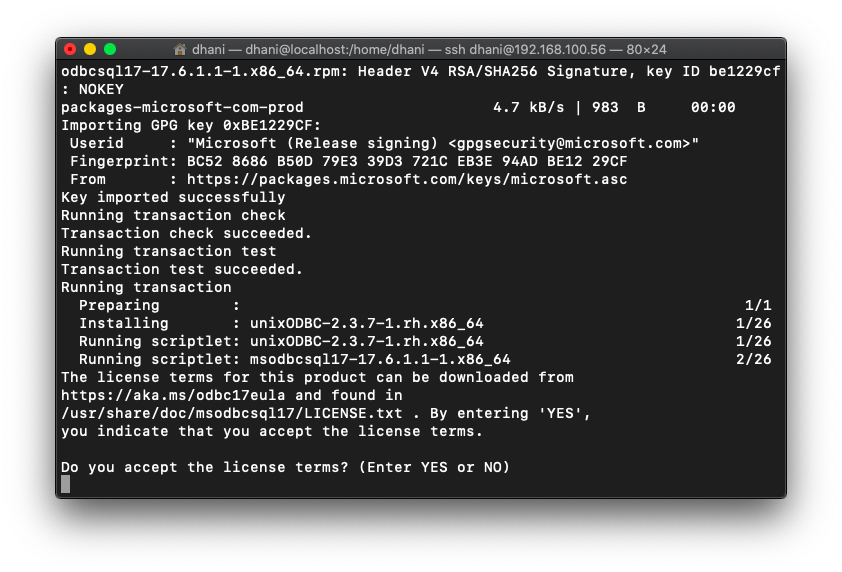

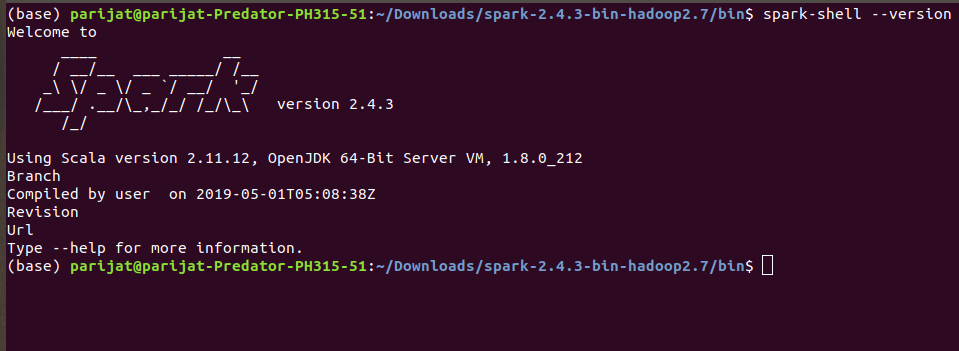

Java JDK 1.8 needs to be available in your system. The Hadoop installation articles include the steps to install OpenJDK.

Run the following command to verify the Java environment: `java -version`

The output should end with a line similar to: OpenJDK 64-Bit Server VM (build 25.212-b03, mixed mode)

Now let's start to configure Apache Spark 3.0.0 in a UNIX-like system.

Visit the Downloads page on the Spark website to find the download URL, then download the binary package by running `wget` with that URL. The next step is to unpack the binary package.
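The Java check can also be scripted. The sketch below is one possible approach: `is_java_8` is a hypothetical helper that matches the first line of the `java -version` banner, and it assumes the usual `version "1.8.x"` wording used by Java 8 builds.

```shell
# Hypothetical helper: succeeds when a `java -version` banner reports a 1.8 JDK.
is_java_8() {
  case "$1" in
    *'version "1.8.'*) return 0 ;;
    *) return 1 ;;
  esac
}

# `java -version` writes to stderr, so redirect it before capturing the banner.
if command -v java >/dev/null 2>&1; then
  banner=$(java -version 2>&1 | head -n 1)
  if is_java_8 "$banner"; then
    echo "Java 1.8 detected: $banner"
  else
    echo "Java found, but not 1.8: $banner"
  fi
else
  echo "java is not on PATH"
fi
```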


Prerequisites

Windows Subsystem for Linux (WSL)

If you are planning to configure Spark 3.0 on WSL, follow this guide to set up WSL on your Windows 10 machine: Install Windows Subsystem for Linux on a Non-System Drive

Hadoop 3.3.0

This article uses the Spark package without pre-built Hadoop, so a Hadoop environment needs to be set up first. If you choose to download the Spark package with pre-built Hadoop, Hadoop 3.3.0 configuration is not required.

Follow one of the following articles to install Hadoop 3.3.0 on your UNIX-like system: Install Hadoop 3.3.0 on Windows 10 using WSL.
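Before going further, it can help to confirm that the Hadoop prerequisite is actually reachable from the shell. This is a minimal sketch; `require_cmd` is a hypothetical helper, not part of Spark or Hadoop.

```shell
# Hypothetical helper: report whether a command is available on PATH.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
  else
    echo "missing: $1" >&2
    return 1
  fi
}

# The Spark package without pre-built Hadoop relies on the hadoop command.
if require_cmd hadoop; then
  # Print the first line of the version banner for a quick sanity check.
  hadoop version | head -n 1
else
  echo "Install Hadoop 3.3.0 first, or use a Spark package with pre-built Hadoop."
fi
```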

This article provides a step-by-step guide to install Apache Spark 3.0.0 on a UNIX-like system (Linux) or Windows Subsystem for Linux (WSL). These instructions can be applied to Ubuntu, Debian, Red Hat, OpenSUSE, macOS, etc.
